By Michael Oliver

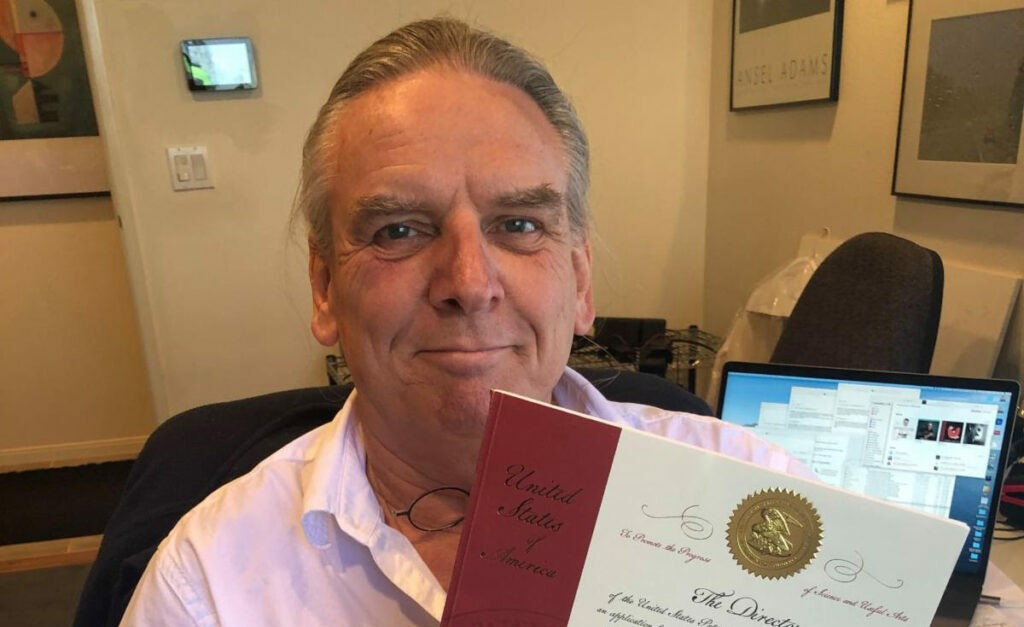

Photo Above: Mad Systems’ Maris Ensing holds one of the company’s recent patents.

With their second patent awarded in less than 10 months, the team at Mad Systems Inc. – led by company founder and engineer extraordinaire Maris Ensing – has added something new and sophisticated to the tech/creative toolbox, bringing with it a flexible menu of new capabilities that can be layered into Mad’s unique AV system, Quicksilver®, or in fact any capable AV, media delivery or digital signage/wayfinding system.

According to Ensing, the new patent covers systems and methods for generating targeted media content through recognition technology. He explains, “It covers any and all applications where we’re using recognition technology to recognize individuals, groups of individuals, cars, colors, colored flags, colored stickers, and use that information to deliver media, to personalize media/information delivery or create interactive or experiential exhibits.”

The suite of technologies that can be delivered through Quicksilver, expanded by the newly patented recognition and associated technologies, also extends to RFID, barcode readers, QR code solutions, hard-wired components, a completely wireless AV system, and even hybrid systems that use some or most of the above. Mad Systems’ new patented technologies were developed with just a few basic ideas in mind: personalization of experiences, privacy, speed of response, ADA compliance, Covid-19 issues and what Ensing calls “future-proofing.”

We asked Ensing about some details of the new technologies and how they open up new market opportunities and possibilities for experience design.

How does the new patent complement the patent you were awarded in 2019?

The new patent is much more fundamental than the patent granted last year. The new patent covers systems and methods for generating targeted media content. The previous patent is for systems and methods for providing location information about registered users. It is again based on facial recognition or color recognition.

How do you use encryption and speed of response to protect individual privacy while allowing this recognition technology to enhance the guest experience?

With regard to privacy and the pictures we use for facial recognition, the technology converts a picture of a person’s face into a vector diagram, and then encrypts the vectors so that the only thing the system needs to keep is that vector diagram. The engine that does the comparison works only in that encrypted domain, so you can’t even get an unencrypted version of that data out once it’s been converted. Even if somehow it were possible to decrypt it, you cannot take a vector file and recreate a face from it. Also, with our system, there is no need for an internet link, and if desired the data can be stored in a locked room. Finally, the system’s back end is capable of running SQL. When you scrub an SQL database, which is done at the end of each day, there is nothing left behind. In other words, this data is entirely secure; the privacy of the individual’s picture is complete.
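To make the idea of comparing faces as vectors more concrete, here is a minimal illustrative sketch. It assumes each registered face has already been reduced to a fixed-length list of numbers. The function names, the visitor IDs and the 0.8 threshold are hypothetical, and the encryption step Ensing describes is deliberately left out. This is not Mad Systems’ implementation, only one common way such vectors can be matched.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector cannot match anything
    return dot / (norm_a * norm_b)

def best_match(probe, enrolled, threshold=0.8):
    """Return the ID of the most similar stored vector, or None.

    `threshold` is an assumed cut-off; a real system would tune it.
    """
    best_id, best_score = None, threshold
    for visitor_id, vector in enrolled.items():
        score = cosine_similarity(probe, vector)
        if score >= best_score:
            best_id, best_score = visitor_id, score
    return best_id

# Hypothetical three-number vectors, kept tiny for readability.
enrolled = {
    "visitor-1": [0.9, 0.1, 0.0],
    "visitor-2": [0.0, 0.2, 0.9],
}
```

Only the vectors are stored and compared in this sketch; no image is kept, which mirrors the point made above that the picture itself is not needed once it has been converted.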

In terms of response speed, our technology is very fast. In comparison, using an internet link to a remote recognition system is not only less secure, it is also very slow. The time between initiating a stream of content and getting an identifier back can be as long as 15 to 17 seconds. A visitor who must wait 10 seconds or longer for their media to begin will likely move on to the next gallery or attraction before the media can even start. In comparison, by keeping the system local, our response time, including identification and the beginning of media delivery, tends to be between 300 and 500 milliseconds, occasionally 700 milliseconds – but always well under one second. What this means is that some of the same elements that support privacy protection for the customer also enhance the speed of response, which is crucial to maintaining the customer’s engagement.

Does the new technology support ADA compliance?

Yes, it covers such things as modifying the behavior of an interactive. For example, if a visitor is in a wheelchair, and we know that they can’t reach up (and they’ve indicated that when they log in) then the interactive can drop the buttons down and bring them to their level. This is a capability that opens up design possibilities as well as accessibility. There’s great flexibility in where the buttons can be placed, provided that for ADA purposes they bring those buttons down and they are within the zone permitted by the Americans with Disabilities Act, which is very well described.

If a visitor has vision problems, the exhibit can deliver a high contrast version of the media. Designers generally encounter so many limitations – as to colors that they shouldn’t combine, as to minimum font sizes, and so on – but when you can adapt the system, adapt the medium to your audience (e.g., changing to high contrast for the visually impaired) you’re not only meeting the requirements of the ADA, you’re exceeding them. Again, we’re opening up design possibilities and accessibility at the same time.

What is the license plate recognition that is covered by the new patent?

In our search for ideas for personalizing experiences, we came across the idea of recognizing cars, especially if you know who is in those cars. We started to think about the idea of using license plate recognition, and realized how useful it is as a technology.

A basic example is selecting VIP parking at a concert, event, or a theme park. License plate recognition technology can ensure that a customer who has chosen VIP parking will not have to wait in long lines at the gate, but rather be immediately directed to the VIP parking spot.
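The VIP parking example can be sketched in a few lines. The sketch assumes a camera system has already read the plate as text and that the venue holds a set of plates registered for VIP parking. The function names and the lane labels are hypothetical, chosen only for illustration.

```python
def normalize_plate(raw):
    """Uppercase the plate and keep only letters and digits."""
    return "".join(ch for ch in raw.upper() if ch.isalnum())

def route_vehicle(raw_plate, vip_plates):
    """Return the lane a vehicle should be directed to.

    `vip_plates` is an assumed set of already-normalized plates
    belonging to customers who chose VIP parking.
    """
    plate = normalize_plate(raw_plate)
    return "VIP parking" if plate in vip_plates else "general entrance"

# Hypothetical registration data.
vip_plates = {"ABC1234"}
```

Normalizing before the lookup means a plate read as `abc-1234` or `ABC 1234` still matches the registered `ABC1234`.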

This new technology opens up new markets with more possibilities, especially for the digital signage market, for marketing and advertising and for customer service purposes.

Have you solved the problem of facial recognition meets Covid mask?

Yes, our facial recognition systems will work with masks, and we are finishing that development work. Stay tuned for an announcement within the next few months.

What do you mean by the term “future-proofing” in this context?

That’s something we talk about as a team, on every project: How do we make sure that this is going to be okay in 10 or 20 years’ time? Well, for one thing, Mad Systems finished the integration and convergence of AV and IT years ago. Computers are running virtually everything: audio servers, video servers, digital I/O, RFID readers. The platform for computer use is huge now, spanning billions of people. So we’re confident that people will be making computers 10 years from now, 20 years from now; likely they will be faster and smaller but, since our system is designed as modular in many areas, it readily supports replacing old units with new. As long as processing performs as it does now or better – and it will – we can easily adjust, and so can our clients.

In addition, all of our latest hardware is non-proprietary. This makes it easier to acquire and install replacement units well into the future.

How might this new technology change a designer’s approach to experience design, and influence the attractions industry?

Let’s look at traveling exhibitions as an example. Traveling shows have become very important in museums and science centers, a primary market for Mad Systems. One of the challenges of traveling shows is that elements have to be reconfigured every time they travel to a new location, because each venue is different. A special challenge can arise when the exhibit depends on an elaborate electrical harness – as many still do – which must be connected to each venue’s power supply to run the exhibit.

With our system, there are no wires; you just put things where you want to and make sure there is power. So does it change the way you think about AV when you are designing a traveling show? Of course! And this is just one obvious example. What we are doing is so much more capable and flexible than what we could do in the past; the differences are stunning.

Basically our systems make technology more usable, more friendly, more maintainable, more affordable, and more stable over the long term. With all that, all of a sudden you have a system that is a lot more attractive, so yes, we see it making an enormous difference in the way designers and tech designers will think about a project, which translates into changed expectations all around.

You know, when a designer comes into our lab and sees what we have developed, the usual response is, “This changes everything!” and it does.

300-500 milliseconds to delivery: A hypothetical recognition scenario

A person enters a museum that has been equipped with Mad Systems’ latest facial recognition-driven AV technology. The visitor registers at the ticket counter, or perhaps self-registers at a convenient kiosk near the entrance, or may have registered online in advance. As part of registration the visitor provides a portrait or allows the system to capture a likeness.

During this process the visitor is also presented with the option to enter any of a number of items relating to their needs or preferences. These could include foreign language subtitles or audio at the various exhibits. Perhaps the visitor is an academic and requests a more advanced level of information at a particular exhibit – or knows little about the subject and desires a more introductory level of content. Perhaps there are hearing issues, vision issues, or mobility issues to accommodate.

All of these data points are stored and associated with the encrypted equivalent of the visitor’s picture. As visitors proceed through the exhibits, this enables the system to recognize them, address them by name and deliver media and content specifically tailored to the information, preferences and needs entered during registration.

If the visitor is in a wheelchair, touchscreen buttons will automatically lower to within their range of access. If hard of hearing, volume levels will automatically rise. If visually impaired, high-contrast video and stills will replace standard video. The need for foreign-language subtitles or audio will also be answered. And, according to Ensing’s description, the visitor receives all of this personalized content and communication in well under a second: recognition and the start of personalized media delivery take 300 to 500 milliseconds.
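The scenario above amounts to mapping a recognized visitor’s stored preferences onto exhibit settings. The following is a minimal sketch of that mapping, assuming recognition has already returned a profile. The `VisitorProfile` fields, the `presentation_for` function and the setting labels are all hypothetical and do not describe Quicksilver’s actual data model.

```python
from dataclasses import dataclass

@dataclass
class VisitorProfile:
    """Preferences a visitor might enter at registration (assumed fields)."""
    name: str
    language: str = "en"
    uses_wheelchair: bool = False
    hard_of_hearing: bool = False
    low_vision: bool = False

def presentation_for(profile):
    """Translate a visitor's profile into settings for one exhibit."""
    return {
        "greeting_name": profile.name,
        "language": profile.language,
        # Lower the touchscreen buttons for wheelchair users.
        "button_zone": "lowered" if profile.uses_wheelchair else "standard",
        # Raise the volume for visitors who are hard of hearing.
        "volume": "raised" if profile.hard_of_hearing else "standard",
        # Swap in high-contrast media for visually impaired visitors.
        "video_variant": "high_contrast" if profile.low_vision else "standard",
    }

# Hypothetical visitor who asked for French content and uses a wheelchair.
visitor = VisitorProfile(name="Alex", language="fr", uses_wheelchair=True)
settings = presentation_for(visitor)
```

Because the mapping is a simple local lookup, it adds very little to the response time once the visitor has been identified.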

Visit www.madsystems.com

Contributing writer Michael Oliver comes to us by way of academia, as a retired literature and philosophy professor whose teaching career lasted some 28 years. Prior to the classroom, his early training and work were in engineering, which took him from nuclear missile silos in North Dakota to the Rhine River, where he worked as a ship’s engineer. Michael brings his dual background and range of experience to write about technology and other subjects for InPark.